Evaluating learning experiences

In higher education, educators continually develop a variety of learning and teaching experiences, including courses, subjects, lectures and tutorials. Evaluating these experiences is essential to ensure that the desired outcomes are met. Evaluations serve multiple purposes, such as:

- Analysing the effectiveness of an activity or assessment.

- Conducting course reviews.

- Supporting research.

- Preparing evidence for continuing professional development.

As stated in the UOW Assessment and Feedback policy, quality assurance processes must be in place “to ensure the appropriateness and quality of assessment meets the standards required by the University and fosters a culture of continuous improvement.”

The Kirkpatrick Model of Evaluation provides a framework for assessing learning events and identifying the evidence needed to guide effective change. Using this model helps ensure that evaluations lead to meaningful improvements in learning and teaching practice.

Why?

Evaluating learning experiences shows whether their aims are being met. The type of evidence collected determines the depth of the evaluation and how confidently you can claim that those aims were achieved. For example, student surveys offer insight into how an experience is perceived, but they reveal little about whether learning has occurred. Additional data, such as learner performance on assessment tasks or the application of skills following the learning experience, provide stronger indicators of learning.

How?

Typically, learning evaluation is thought of as feedback from student surveys at the end of a session, or overall performance on assessment tasks. However, these are not the only ways to obtain data for evaluating the success of a learning experience.

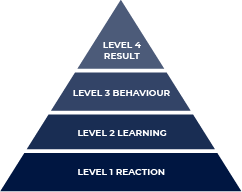

The Kirkpatrick Model of Evaluation provides a structured framework for assessing various types of evidence in learning and teaching experiences. The model consists of four levels of evidence, each aimed at evaluating different aspects of the learning experience.

Level 1: Reaction

Focuses on learners’ perceptions and feelings about a learning event. This is the most basic level, and the point at which most evaluations stop.

Level 2: Learning

Evaluates the degree to which learners achieve desired learning outcomes and demonstrate the required skills, knowledge, and attitudes. This evaluation is typically conducted through formative and summative assessments within a learning experience.

Level 3: Behaviour

Assesses the extent to which learners demonstrate a change in behaviour following a learning experience. The purpose at this stage is to measure knowledge transfer and its application beyond the learning environment.

Level 4: Results

Measures the overall success of a learning event. This can include metrics such as return on expectations and return on investment, as well as organisational or cultural change.

Using the Kirkpatrick Framework to create an Evaluation Strategy

The Kirkpatrick Framework serves as a foundation for planning an evaluation strategy, which should be embedded in the holistic design of learning experiences. When designing your learning event, consider its goals and the types of evidence you could collect at each level of the Kirkpatrick Framework to support a thorough evaluation. For an example of this mapping process, refer to Praslova (2010), pp. 222-223.
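For those who keep their planning notes in a script or notebook, the mapping of evidence to levels can be sketched as a small data structure. This is only an illustrative sketch, not part of the Kirkpatrick model or of any UOW process: the `EvaluationPlan` class, its method names and the example goal are hypothetical, and the evidence sources are the ones mentioned earlier on this page.

```python
from dataclasses import dataclass, field

# The four level names are taken from the Kirkpatrick model described above.
KIRKPATRICK_LEVELS = {1: "Reaction", 2: "Learning", 3: "Behaviour", 4: "Results"}


@dataclass
class EvaluationPlan:
    """Hypothetical planning aid: records the evidence planned for each level."""

    goal: str
    # One empty list of evidence sources per level to start with.
    evidence: dict = field(
        default_factory=lambda: {level: [] for level in KIRKPATRICK_LEVELS}
    )

    def add_evidence(self, level: int, source: str) -> None:
        """Record one evidence source against a Kirkpatrick level (1 to 4)."""
        if level not in KIRKPATRICK_LEVELS:
            raise ValueError(f"Level must be one of {sorted(KIRKPATRICK_LEVELS)}")
        self.evidence[level].append(source)

    def uncovered_levels(self) -> list:
        """Return the names of levels that have no planned evidence yet."""
        return [
            KIRKPATRICK_LEVELS[level]
            for level in sorted(self.evidence)
            if not self.evidence[level]
        ]


# Example goal is an assumption made for illustration only.
plan = EvaluationPlan(goal="Students can apply the skills taught in the subject")
plan.add_evidence(1, "End-of-session student survey")
plan.add_evidence(2, "Learner performance on assessment tasks")

print(plan.uncovered_levels())  # ['Behaviour', 'Results']
```

In this example the plan stops at Level 2, so the sketch flags Behaviour and Results as the levels where further evidence (for instance, application of skills after the learning experience) could still be planned.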

Related information

The following resources link to specific UOW initiatives and processes for evaluating learning and teaching and for facilitating quality assurance in UOW courses and subjects:

- Student evaluation of learning and teaching | UOW Intranet

- Accreditation for Learning and Teaching (CPD Portfolio) | UOW Intranet

- Peer Review (LTC) | UOW Intranet

References

Cahapay, M.B. (2021). Kirkpatrick model: Its limitations as used in higher education. International Journal of Assessment Tools in Education, 8(1), 135-144. https://doi.org/10.21449/ijate.856143

Mind Tools. (n.d.). Kirkpatrick’s four-level training evaluation model: Analyzing learning effectiveness. Retrieved February 17, 2022, from https://www.mindtools.com/pages/article/kirkpatrick.htm

Praslova, L. (2010). Adaptation of Kirkpatrick’s four level model of training criteria to assessment of learning outcomes and program evaluation in higher education. Educational Assessment, Evaluation and Accountability, 22(3), 215-225. https://doi.org/10.1007/s11092-010-9098-7