Gen AI efficiencies in feedback practices

Providing individualised feedback on student assessment tasks is time-consuming, and it can be tempting for educators to reuse the exact same feedback statements across multiple assignments to save time. However, students quickly notice when comments feel generic or repetitive, especially if they compare their feedback with peers. This can leave students feeling undervalued and disengaged, as the feedback doesn’t seem tailored to their individual effort or needs.

Generative artificial intelligence (gen AI) tools offer a way to work smarter, enabling educators to provide feedback that avoids repetitive wording but carries the same meaning and intention.

How?

The following is a step-by-step example of how to use a gen AI tool to create varied feedback comments efficiently. However, please note these two important points:

- Due to data security and privacy risks, educators should never upload student work to a gen AI tool.

- The process described below is intended to create time efficiencies by generating initial variations of feedback comments. Educators should ensure that comments are sufficiently personalised to each student. The gen AI tool’s output should be treated as a starting point to be customised, for example by including students’ names.

Note: For additional guidance, please refer to the L&T Hub article, Constructing effective feedback.

Each of the examples on this page can be implemented using Copilot for the Web. When you are logged in with UOW credentials, Copilot for the Web provides institutional data protection, ensuring your data is not saved or used to train the model.

The best approach to prompting in a gen AI tool is to:

- specify the role you want the tool to assume;

- outline the task; and

- provide clear instructions.

The more detailed you can be, the better the result. For example, consider the following prompt in Microsoft Copilot for Web:

“You are a university lecturer who recognises the importance of providing clear feedback to students. You provide responses in Australian English spelling.

I am marking a set of student assignments and I want to include a statement in my concluding feedback that lets students know that I offer them the opportunity to get further highly personalised feedback through a zoom consultation, and that they should email me to request a consultation and provide suggestions of three days and times that suit them.

Please create a total of 5 different statements based on my request. Please ensure that you keep the intention and meaning of the phrase the same across the different variations.”

In this example, the prompt clearly specifies:

- the role (university lecturer);

- the task (creating 5 versions of a feedback statement); and

- the instructions (giving it the topic, telling it you want to ensure the intention and meaning stay the same).
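For educators who keep their prompts in a script or notebook, the role, task and instructions structure can be sketched in Python. This is a minimal illustration only: `build_prompt` is a hypothetical helper, not part of Copilot or any library, and the task wording here is shortened from the prompt above. The resulting text would still be pasted into the gen AI tool by hand.

```python
def build_prompt(role: str, task: str, instructions: list[str]) -> str:
    """Assemble a prompt from its three parts: role, task, instructions."""
    parts = [role.strip(), task.strip()]
    parts.extend(line.strip() for line in instructions)
    # Blank lines between parts keep the prompt readable when pasted into a chat window.
    return "\n\n".join(parts)


# Hypothetical helper call; the wording is abbreviated from the example prompt.
prompt = build_prompt(
    role=(
        "You are a university lecturer who recognises the importance of "
        "providing clear feedback to students. You provide responses in "
        "Australian English spelling."
    ),
    task=(
        "I am marking a set of student assignments and I want to include a "
        "statement in my concluding feedback that invites students to email "
        "me to request a Zoom consultation."
    ),
    instructions=[
        "Please create a total of 5 different statements based on my request.",
        "Please ensure that you keep the intention and meaning the same "
        "across the different variations.",
    ],
)
print(prompt)
```

Keeping the three parts as separate arguments makes it easy to reuse the same role while swapping in a new task or a different number of variations.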

The gen AI tool may not provide the exact results you are looking for on the first attempt. You might need to work with it by asking it to generate more sentences or suggesting the removal of certain specifics. For example, when Copilot was asked to generate five versions, this was the result:

“Certainly! Here are five different statements you can use in your concluding feedback:

- If you would like more personalised feedback, I am available for Zoom consultations. Please email me to arrange a session and suggest three days and times that suit you.

- For those seeking further detailed feedback, I offer Zoom consultations. Kindly email me to schedule a meeting and include three preferred days and times.

- I am happy to provide additional personalised feedback through Zoom consultations. Please reach out via email to set up a time, and suggest three possible days and times that work for you.

- Should you need more in-depth feedback, I am available for Zoom consultations. Email me to book a session, including three days and times that are convenient for you.

- To receive further tailored feedback, I offer Zoom consultations. Please email me to arrange a consultation and provide three suitable days and times.

Feel free to use any of these variations to communicate your availability for further feedback effectively!”

In this example, we can then ask Copilot to refine #2:

Prompt:

“Thank you. Can you please amend #2 so that it doesn't sound generic with the ‘for those seeking…’?”

Response:

“Of course! Here's the revised version of statement #2:

If you would like more detailed feedback, I offer Zoom consultations. Kindly email me to schedule a meeting and include three preferred days and times.

I hope this works better for you!”

This is an example of creating multiple versions of general feedback statements.
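Once a set of variations has been reviewed, one way to spread them across a class is to cycle through them so that consecutive students do not receive identical wording. The sketch below is an assumption about how this could be done locally, not part of the process described here: `assign_variations` is a hypothetical helper, the student names are invented, and the statements are shortened from the Copilot output above. Each comment would still need to be checked and personalised by the educator.

```python
from itertools import cycle


def assign_variations(names: list[str], variations: list[str]) -> dict[str, str]:
    """Pair each student with one variation, cycling through them in order."""
    return {
        name: f"Hi {name}. {text}"
        for name, text in zip(names, cycle(variations))
    }


# Shortened versions of the statements generated in the example above.
variations = [
    "If you would like more personalised feedback, I am available for Zoom consultations.",
    "I am happy to provide additional personalised feedback through Zoom consultations.",
    "Should you need more in-depth feedback, I am available for Zoom consultations.",
]
names = ["Alex", "Bao", "Chloe", "Dev"]  # hypothetical student names

comments = assign_variations(names, variations)
for comment in comments.values():
    print(comment)
```

With three variations and four students, the fourth student receives the first variation again, so a larger set of variations reduces the chance of students spotting repeated wording.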

Building on the above example, you can go one step further by generating examples for more specific feedback points that are related to a criterion in a marking rubric.

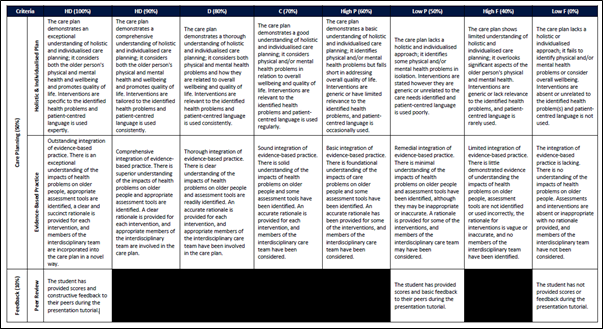

For example, the image below is a sample marking rubric for an assessment task:

The gen AI tool can generate varied comments tailored to particular performance level descriptors. In this example, the gen AI tool Microsoft Copilot for Web is used to provide four variations of feedback comments for each performance level of the ‘Peer review’ criterion.

“You are a university lecturer who is marking student assignments. You excel in providing learner-centred feedback (with Australian English spelling), that adheres to the following evidence-based principles (as developed in academic papers by Tracii Ryan and colleagues): focus on future impact; promote learner agency; and facilitate learner sensemaking. You ensure that your feedback has a positive tone, is encouraging, and specific.

In the attached file there is an assignment marking rubric. For the "Peer review" criterion, for each available performance level, ie. HD (100%); Low P (50%); and Low F (0%), please create 4 variations of a feedback comment that aligns with the descriptor for each performance level. Please ensure that you keep the intention and meaning of the descriptor the same across the different variations. Marking rubric.pdf”

And here are some of the results, specifically what was generated for the HD and Low P level descriptors:

“Here are four variations of feedback comments for each performance level of the "Peer review" criterion, based on the provided rubric:

HD (100%)

- Outstanding work! Your scores and constructive feedback during the presentation tutorial were highly insightful and beneficial for your peers. Keep up the excellent effort in providing detailed and thoughtful reviews.

- Excellent job! You provided comprehensive scores and valuable feedback that greatly assisted your peers in understanding their strengths and areas for improvement. Your attention to detail is commendable.

- Fantastic effort! Your peer reviews were thorough and constructive, offering meaningful insights that will help your peers enhance their work. Your ability to provide detailed feedback is impressive.

- Great work! You delivered well-considered scores and constructive feedback that were very helpful to your peers. Your dedication to providing quality reviews is evident and appreciated.

Low P (50%)

- Good attempt! You provided scores and some basic feedback during the presentation tutorial. To improve, try to offer more detailed and constructive comments that can help your peers grow.

- Nice effort! You gave scores and basic feedback, which is a good start. For future reviews, consider adding more specific suggestions to help your peers understand how they can improve.

- Well done! You provided scores and basic feedback. To enhance your reviews, aim to include more detailed and constructive comments that can guide your peers in their learning journey.

- Solid start! You offered scores and basic feedback. Moving forward, try to provide more in-depth and constructive feedback to better support your peers' development.”

Reviewing these suggestions, it is clear that the learner-centred principles have been considered, and the feedback links nicely with the descriptors for the performance levels of the ‘Peer review’ criterion in the rubric.

As well as the examples presented above, you can leverage a gen AI tool to quickly draft feedback for correct and incorrect answers in multiple-choice questions. The updated version of the UOW Assessment and Feedback Policy has specific guidance on quiz feedback:

“For quizzes, feedback must be provided as either individual or general feedback to the entire cohort to enable student learning. For online quizzes, Subject Coordinators are encouraged to utilise the feedback tools available in the Learning Management System. To strengthen the academic integrity of the assessment, results and feedback must not be released until after the quiz has closed.”

- UOW Assessment and Feedback Policy

When using a gen AI tool that doesn’t save your data or use it to train the underlying model (such as Microsoft Copilot for Web), you can input your quiz questions and request feedback for both correct and incorrect answers. This feedback can then be added to the corresponding questions in your Moodle Question Bank. Remember to always check the accuracy of the feedback before using it, and refine the gen AI responses using your expertise and judgement.